Best Practices for Table-Driven Testing in Golang

Table-driven testing has become a popular technique in Golang for its conciseness, clarity, and efficiency. It removes redundancy and enables testing various cases with minimal code duplication.

Why table-driven testing?

Reduced boilerplate code

Table-driven testing requires less code than writing a separate test function for each case. It is Go's idiomatic form of parameterized (data-driven) testing: the test logic is written once and each case is a single row of data. This eliminates repetitive test case definitions and lets you focus on the core logic of your function.

Improved readability

By using tables to define test cases, you can easily see what inputs are being tested and what the expected output should be. This makes it easier to understand the behavior of your function and identify potential issues.

Better coverage

Table-driven testing allows you to cover a wide range of inputs and outputs with little extra code, because each additional case is just one more row. This is particularly useful for complex inputs and edge cases, which can be added to the table as soon as you think of them.

Easier maintenance

With table-driven testing, you only need to update the input table if there are changes to the function’s behavior. This makes it easier to maintain your tests and ensure that they continue to cover all relevant scenarios.

Improved test data management

Table-driven testing helps manage large amounts of test data by allowing you to define test cases in a structured format. This can be particularly useful when working with datasets that contain multiple rows or columns of input data.

Best practices

Structure your table

Define a dedicated type to represent your test cases. Include fields for input, expected output, and optional descriptive names.

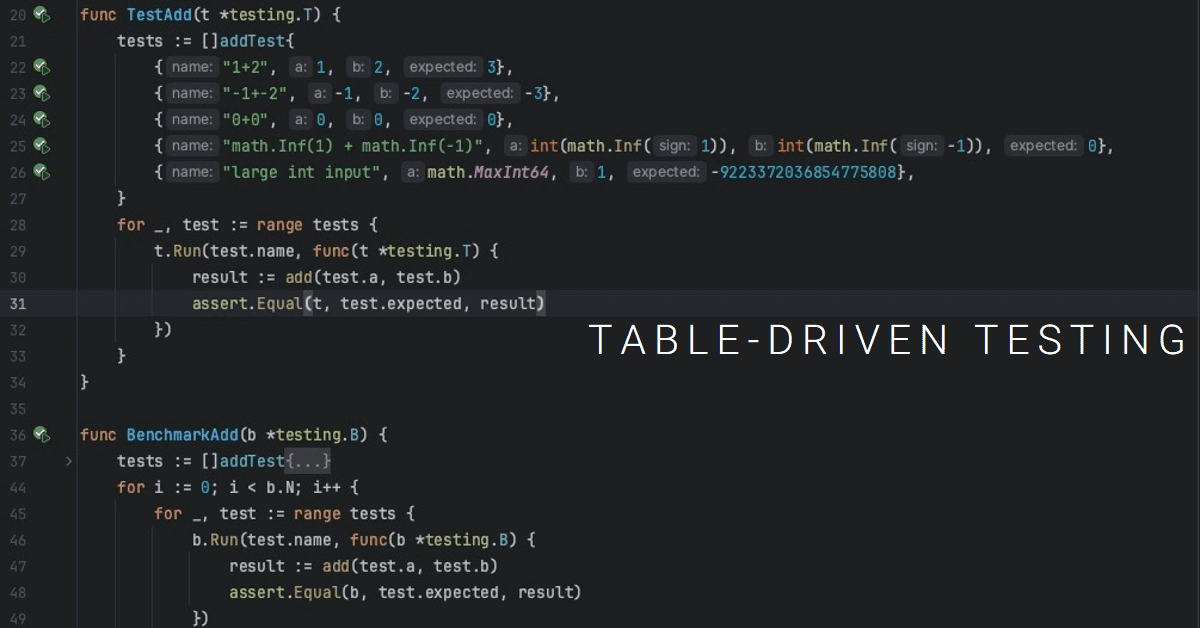

A simple test table for a function that adds two numbers can be built around an addTest struct that holds the inputs and the expected output for the add function. A slice of addTest values called tests then drives the test loop: for each test case, we call the add function with the input values and compare the result with the expected output.
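
The original listing is not available here, so the following is a minimal sketch of the table described above. The names addTest, tests, and add come from the text; the field names a, b, and want and the concrete rows are assumptions made for illustration.

```go
package main

import "testing"

// add is a stand-in for the function under test.
func add(a, b int) int {
	return a + b
}

// addTest is one row of the table: two inputs and the expected sum.
// The field names are illustrative, not taken from the original listing.
type addTest struct {
	a, b int
	want int
}

// tests is the table itself. Adding a case means adding one row.
var tests = []addTest{
	{a: 1, b: 2, want: 3},
	{a: 0, b: 0, want: 0},
	{a: -4, b: 4, want: 0},
	{a: 10, b: -3, want: 7},
}

// TestAdd walks the table and checks every row with the same logic.
func TestAdd(t *testing.T) {
	for _, tc := range tests {
		got := add(tc.a, tc.b)
		if got != tc.want {
			t.Errorf("add(%d, %d) = %d, want %d", tc.a, tc.b, got, tc.want)
		}
	}
}
```

Using t.Errorf rather than t.Fatalf lets the loop continue after a failure, so one run reports every failing row.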

Test edge cases

Another important aspect of table testing is to ensure that you test edge cases and corner cases. These are inputs at or beyond the boundaries of normal operation, which a table of typical values tends to miss. Examples of edge cases include:

- Negative numbers

- Zero values

- NaN (not a number) values

- Large numbers

- Small numbers

- Infinity

- -Infinity
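
Several items in this list (NaN and the infinities) only apply to floating-point inputs. The sketch below assumes a hypothetical float64 variant of the function, addFloat, and a hypothetical helper, sameFloat, because NaN never compares equal to itself and a plain == check would always fail for it.

```go
package main

import (
	"math"
	"testing"
)

// addFloat is a hypothetical float64 version of the function under test.
func addFloat(a, b float64) float64 {
	return a + b
}

// sameFloat reports whether got matches want, treating NaN as equal to NaN.
func sameFloat(got, want float64) bool {
	if math.IsNaN(want) {
		return math.IsNaN(got)
	}
	return got == want
}

// floatEdgeCases has one row per kind of edge case from the list above.
var floatEdgeCases = []struct {
	name string
	a, b float64
	want float64
}{
	{"zero values", 0, 0, 0},
	{"negative numbers", -1.5, -2.5, -4},
	{"large numbers overflow to +Inf", math.MaxFloat64, math.MaxFloat64, math.Inf(1)},
	{"smallest positive number", math.SmallestNonzeroFloat64, 0, math.SmallestNonzeroFloat64},
	{"infinity", math.Inf(1), 1, math.Inf(1)},
	{"negative infinity", math.Inf(-1), 1, math.Inf(-1)},
	{"infinity plus negative infinity", math.Inf(1), math.Inf(-1), math.NaN()},
	{"NaN input", math.NaN(), 1, math.NaN()},
}

func TestAddFloatEdgeCases(t *testing.T) {
	for _, tc := range floatEdgeCases {
		got := addFloat(tc.a, tc.b)
		if !sameFloat(got, tc.want) {
			t.Errorf("%s: addFloat(%v, %v) = %v, want %v", tc.name, tc.a, tc.b, got, tc.want)
		}
	}
}
```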

The previous table can be extended with edge cases. For example, a new test case can check what happens when the input values are very large, using the math.MaxInt64 constant as a very large integer value and adding 1 to it. Note that math.MaxInt64 + 1 written as a constant expression does not compile for an int64 value, and when the sum is computed at run time it wraps around to math.MinInt64. The expected value in the table must therefore state the wraparound explicitly, which documents how the function behaves when working with very large numbers.
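
A minimal sketch of such a large-value case follows. It assumes add takes and returns int64 so that math.MaxInt64 is a valid input on every platform; the table name overflowTests and its rows are illustrative.

```go
package main

import (
	"math"
	"testing"
)

// add is assumed to work on int64 for this sketch.
func add(a, b int64) int64 {
	return a + b
}

var overflowTests = []struct {
	name string
	a, b int64
	want int64
}{
	{"small values", 1, 2, 3},
	{"max plus zero", math.MaxInt64, 0, math.MaxInt64},
	// The inputs are passed separately: the constant math.MaxInt64 + 1
	// would not compile as an int64. At run time the sum wraps around.
	{"max plus one wraps", math.MaxInt64, 1, math.MinInt64},
	{"min minus one wraps", math.MinInt64, -1, math.MaxInt64},
}

func TestAddOverflow(t *testing.T) {
	for _, tc := range overflowTests {
		got := add(tc.a, tc.b)
		if got != tc.want {
			t.Errorf("%s: add(%d, %d) = %d, want %d", tc.name, tc.a, tc.b, got, tc.want)
		}
	}
}
```

If wraparound is not acceptable for your function, this is the row that will tell you so, and the function would need an explicit overflow check.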

Use benchmarks to measure performance

Benchmarking complements table-driven unit testing in Go. By using benchmarks, you can measure how your function performs across different inputs, including large amounts of data, and use the measurements to guide optimization. Checking that the function behaves correctly remains the job of the tests.

The same table approach works for benchmarks, which use the testing.B struct. A BenchmarkAdd function takes a *testing.B, iterates over the slice of addTest values, and for each case calls the add function with the input values inside the timed loop. Because a benchmark measures speed rather than correctness, comparing the result with the expected output is best left to the test function.
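
The sketch below shows one way to write such a benchmark, with one sub-benchmark per row via b.Run. The table benchCases, its rows, and the sink variable are assumptions for illustration; sink exists only so the compiler cannot discard the call to add.

```go
package main

import (
	"fmt"
	"testing"
)

// add is a stand-in for the function being measured.
func add(a, b int) int {
	return a + b
}

// benchCases holds only inputs: a benchmark times the call and does not
// assert on the result.
var benchCases = []struct{ a, b int }{
	{1, 2},
	{-4, 4},
	{1000000, 2000000},
}

// sink keeps the result alive so the call is not optimized away.
var sink int

func BenchmarkAdd(b *testing.B) {
	for _, bc := range benchCases {
		name := fmt.Sprintf("%d+%d", bc.a, bc.b)
		// Each row becomes its own sub-benchmark with its own timing.
		b.Run(name, func(b *testing.B) {
			for i := 0; i < b.N; i++ {
				sink = add(bc.a, bc.b)
			}
		})
	}
}
```

Placed in a _test.go file, this runs with `go test -bench=.`, and each row is reported on its own line.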

Keep it concise

Limit the table size to a reasonable number of cases. If you have too many, consider splitting them into separate functions or sub-tables.
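
One possible way to split a large table is to group rows into named sub-tables and run each group as its own subtest. This is a sketch under assumptions: the type addCase, the map addGroups, and the grouping itself are hypothetical.

```go
package main

import "testing"

func add(a, b int) int {
	return a + b
}

type addCase struct {
	name string
	a, b int
	want int
}

// addGroups splits one long table into small sub-tables by theme.
var addGroups = map[string][]addCase{
	"positive": {
		{"small", 1, 2, 3},
		{"large", 1000000, 2000000, 3000000},
	},
	"negative": {
		{"both negative", -1, -2, -3},
		{"mixed signs", -5, 5, 0},
	},
	"zero": {
		{"both zero", 0, 0, 0},
	},
}

func TestAddGroups(t *testing.T) {
	// Map iteration order is random, so groups must not depend on each other.
	for group, cases := range addGroups {
		t.Run(group, func(t *testing.T) {
			for _, tc := range cases {
				t.Run(tc.name, func(t *testing.T) {
					if got := add(tc.a, tc.b); got != tc.want {
						t.Errorf("add(%d, %d) = %d, want %d", tc.a, tc.b, got, tc.want)
					}
				})
			}
		})
	}
}
```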

Name your tests

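Name every test case. A descriptive name, included in the failure message or passed to t.Run, tells the reader which row failed and why that row exists, without having to decode the raw inputs.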
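
As a minimal sketch, a name field can be added to each row and printed as a prefix of the failure message. The table namedTests and its rows are illustrative assumptions.

```go
package main

import "testing"

func add(a, b int) int {
	return a + b
}

// namedTests gives every row a short description of its intent.
var namedTests = []struct {
	name string
	a, b int
	want int
}{
	{name: "two positives", a: 1, b: 2, want: 3},
	{name: "identity with zero", a: 7, b: 0, want: 7},
	{name: "opposites cancel", a: -4, b: 4, want: 0},
}

func TestAddNamed(t *testing.T) {
	for _, tc := range namedTests {
		got := add(tc.a, tc.b)
		if got != tc.want {
			// The name leads the message so the failing row is easy to find.
			t.Errorf("%s: add(%d, %d) = %d, want %d", tc.name, tc.a, tc.b, got, tc.want)
		}
	}
}
```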

Focus on the core logic

Avoid complex setup or teardown logic within the table test function. Extract necessary preparations and assertions into helper functions.
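
The sketch below illustrates the idea with a hypothetical function, countBytes, that needs a file on disk. The setup lives in writeFixture and the comparison in assertCount; both names are assumptions. t.Helper makes failures point at the calling line, and t.TempDir removes the directory automatically when the test ends.

```go
package main

import (
	"os"
	"path/filepath"
	"testing"
)

// countBytes is a hypothetical function under test.
func countBytes(path string) (int, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return 0, err
	}
	return len(data), nil
}

// writeFixture is the setup helper: it writes content to a temporary file
// that the testing package cleans up after the test.
func writeFixture(t *testing.T, content string) string {
	t.Helper()
	path := filepath.Join(t.TempDir(), "fixture.txt")
	if err := os.WriteFile(path, []byte(content), 0o600); err != nil {
		t.Fatalf("writing fixture: %v", err)
	}
	return path
}

// assertCount is the assertion helper shared by every row.
func assertCount(t *testing.T, got, want int) {
	t.Helper()
	if got != want {
		t.Errorf("got %d bytes, want %d", got, want)
	}
}

var countTests = []struct {
	name    string
	content string
	want    int
}{
	{"empty", "", 0},
	{"ascii", "hello", 5},
	{"multibyte", "héllo", 6},
}

func TestCountBytes(t *testing.T) {
	for _, tc := range countTests {
		t.Run(tc.name, func(t *testing.T) {
			got, err := countBytes(writeFixture(t, tc.content))
			if err != nil {
				t.Fatalf("countBytes: %v", err)
			}
			assertCount(t, got, tc.want)
		})
	}
}
```

With the helpers extracted, the body of the loop reads as three steps: prepare, call, assert.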

Consider subtests

If your table has complex cases, consider using subtests for better error reporting and granular understanding of failures.
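
As a sketch of subtests, the example below uses a hypothetical divide function with an error path. Each row runs inside t.Run, so a t.Fatalf in one case stops only that case and the remaining rows still run.

```go
package main

import (
	"errors"
	"testing"
)

var errDivideByZero = errors.New("divide by zero")

// divide is a hypothetical function under test with an error path.
func divide(a, b int) (int, error) {
	if b == 0 {
		return 0, errDivideByZero
	}
	return a / b, nil
}

var divideTests = []struct {
	name    string
	a, b    int
	want    int
	wantErr error
}{
	{name: "exact division", a: 6, b: 3, want: 2},
	{name: "truncates toward zero", a: -7, b: 2, want: -3},
	{name: "divide by zero", a: 1, b: 0, wantErr: errDivideByZero},
}

func TestDivide(t *testing.T) {
	for _, tc := range divideTests {
		t.Run(tc.name, func(t *testing.T) {
			got, err := divide(tc.a, tc.b)
			if !errors.Is(err, tc.wantErr) {
				// Fatalf ends this subtest only; other rows still run.
				t.Fatalf("divide(%d, %d) error = %v, want %v", tc.a, tc.b, err, tc.wantErr)
			}
			if got != tc.want {
				t.Errorf("divide(%d, %d) = %d, want %d", tc.a, tc.b, got, tc.want)
			}
		})
	}
}
```

A single case can then be selected by name, for example `go test -run 'TestDivide/divide_by_zero'` (the testing package replaces spaces in subtest names with underscores).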

Test cases with generics

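Since Go 1.18, the test case struct and the loop that runs it can take type parameters, so one table shape can be reused for several types.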
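
The original listing for this section is not available, so the following is only a sketch of what a generic table test might look like. The constraint Number, the function Sum, the case type sumCase, and the runner runSumCases are all hypothetical names.

```go
package main

import "testing"

// Number is a hypothetical constraint covering the types tested below.
type Number interface {
	~int | ~int64 | ~float64
}

// Sum is a hypothetical generic function under test.
func Sum[T Number](values []T) T {
	var total T
	for _, v := range values {
		total += v
	}
	return total
}

// sumCase is a generic table row: the same shape works for any Number.
type sumCase[T Number] struct {
	name   string
	values []T
	want   T
}

// runSumCases holds the loop once and is instantiated per element type.
func runSumCases[T Number](t *testing.T, cases []sumCase[T]) {
	t.Helper()
	for _, tc := range cases {
		t.Run(tc.name, func(t *testing.T) {
			if got := Sum(tc.values); got != tc.want {
				t.Errorf("Sum(%v) = %v, want %v", tc.values, got, tc.want)
			}
		})
	}
}

var intSumCases = []sumCase[int]{
	{"empty", nil, 0},
	{"several", []int{1, 2, 3}, 6},
}

var floatSumCases = []sumCase[float64]{
	{"fractions", []float64{0.5, 0.25, 0.25}, 1},
}

func TestSum(t *testing.T) {
	t.Run("int", func(t *testing.T) { runSumCases(t, intSumCases) })
	t.Run("float64", func(t *testing.T) { runSumCases(t, floatSumCases) })
}
```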