Run Stable Diffusion Locally on Mac

Create Amazing Images Using AI

Blockchain FAQs

Blockchain is a decentralized digital ledger that records transactions across multiple computers. Each record is secured and validated with cryptography, which keeps the ledger transparent and makes tampering easy to detect.

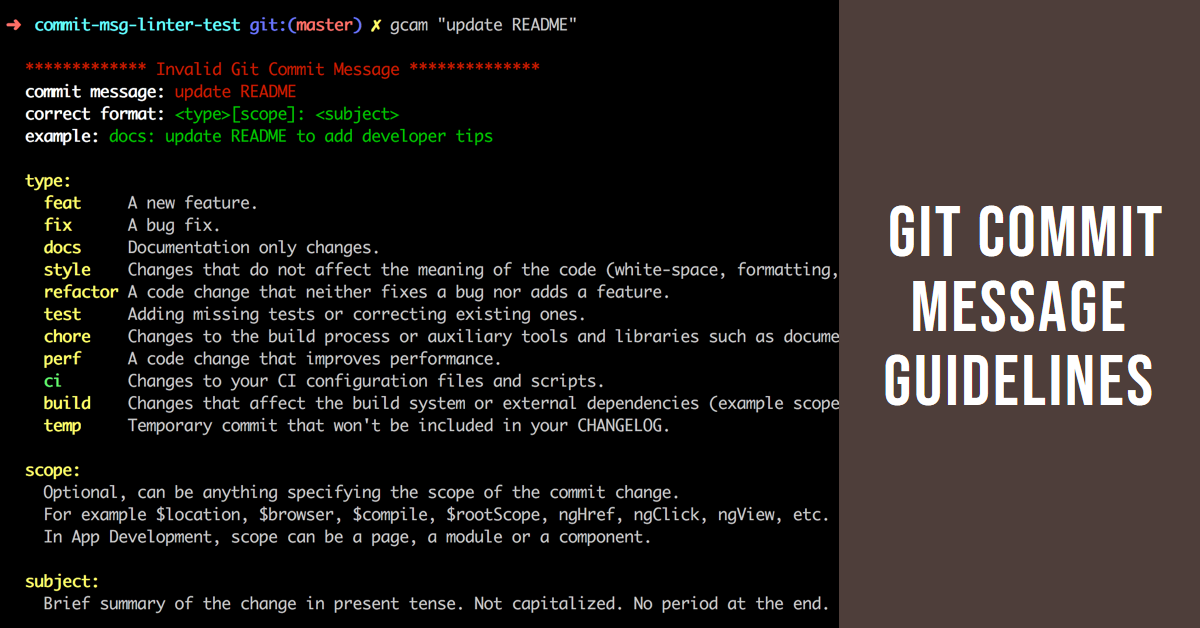
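
To make the tamper-detection idea concrete, here is a minimal sketch of a hash-linked ledger in Python. It is an illustration only, not how any particular blockchain is implemented: the function names (`block_hash`, `build_chain`, `is_valid`), the block layout, and the all-zero genesis hash are assumptions made for this example, and it leaves out decentralization and consensus entirely.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder for "no previous block"


def block_hash(index, transactions, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(batches):
    """Build a list of blocks, each linked to the one before it."""
    chain = []
    prev_hash = GENESIS_HASH
    for index, transactions in enumerate(batches):
        current = block_hash(index, transactions, prev_hash)
        chain.append(
            {
                "index": index,
                "transactions": transactions,
                "prev_hash": prev_hash,
                "hash": current,
            }
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["transactions"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain([["alice pays bob 5"], ["bob pays carol 2"]])
print(is_valid(chain))  # True

# Editing an old transaction changes that block's hash, so validation fails.
chain[0]["transactions"][0] = "alice pays bob 500"
print(is_valid(chain))  # False
```

Because every block's hash covers the previous block's hash, changing one old record invalidates that block and every block after it, which is what makes tampering detectable.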

Git Commit Message Guidelines

Git commit message conventions help maintain consistency and clarity in the development process.

Vim Tutorial

Vim is a highly configurable text editor that has been around for decades. It's known for its steep learning curve, but once mastered, it can greatly speed up the way you work with text.

Run an LLM Locally

Running a large language model (LLM) locally lets you use its capabilities without needing an internet connection.